AI in HR: A Peek Into the Future

Explore the evolving landscape of HR, offering a glimpse into how AI is poised to shape the future of human resources management.

What happens when algorithms start doing the jobs humans once performed? For some, it’s transformational. For others, it’s disruptive.

One thing’s certain: the world of work as we know it is changing, and it’s changing fast. Artificial intelligence (AI) now sits in meeting rooms, operates on factory floors, and even takes part in decision-making itself.

But at what cost, and what gets lost in the tradeoff?

In this article, we’ll explore the pros and cons of AI in the workplace and share ways to adopt this powerful tool responsibly.

In simple terms, AI in the workplace refers to using machines and software that can “learn” and perform tasks that workers traditionally do.

In its current and broad-reaching form, artificial intelligence shows up across almost every industry and for a range of functions:

Today’s AI systems can write code, generate marketing content, conduct preliminary job interviews, and even make hiring recommendations.

The latest surveys show that more than three-quarters of organizations have already adopted AI in at least one area of their operations.

Here’s what makes AI in the workplace different from regular consumer applications: it operates within existing organizational structures and affects multiple stakeholders.

This complexity brings a new set of pros and cons to the debate over AI in the workplace.

When applied thoughtfully, the benefits of AI in the workplace are hard to ignore.

The human brain processes information at roughly ten bits per second, which, in simple terms, means that it is remarkably slow.

However, we have always compensated for our cognitive shortcomings by creating tools that enhance our mental capabilities.

AI is simply the latest evolution in this tool-making tradition.

Chances are, you are already using AI in some capacity while at work. Whether it’s drafting emails, generating meeting summaries, or reviewing data analysis, these small and seemingly insignificant actions are saving you valuable time.

In fact, nine out of ten workers say AI has helped them save time on work tasks.

Adding to that, a Slack survey found that 81% of desk workers who use AI feel the technology is improving their productivity and is especially helpful with writing, research, and workflow automation.

Automation through AI not only saves time but also often improves accuracy and consistency. An AI system can run 24/7 without tiring, performing routine processes the same way every time.

As a result, businesses make fewer errors and have more reliable outputs.

Human intuition, though valuable, is often limited and flawed.

We make decisions based on recent memories, fall for cognitive biases, and struggle to process large data sets objectively.

On the other side of the spectrum, AI can process gigantic datasets in seconds to spot patterns or predict outcomes that would otherwise be easy to miss.

When combined, human judgment and AI’s processing power lead to faster, more informed decisions.

In healthcare, diagnostic AI doesn’t replace doctors, but instead helps them catch conditions they might overlook. Or, in areas such as finance, AI models assess potential risks, but people make the final call after weighing context and nuance.

As a result, more and more companies are capitalizing on these strengths to elevate their strategic decision-making.

For example, IBM famously developed an AI program that could predict with 95% accuracy which employees were likely to quit. As a result, the company has been able to save around $300 million in retention costs.

Executives recognize this value: many have pointed out that AI’s analytical capabilities enable better decision-making by grounding choices in data.

Forward-thinking organizations view AI not as an expense but as an investment that, if executed correctly, pays for itself.

Best of all, there is already evidence to support this claim: companies leading in AI are achieving significant financial benefits.

A Boston Consulting Group study found that “AI leader” firms (those advanced in adoption) expect 60% higher AI-driven revenue growth and nearly 50% greater cost reductions by 2027 than others.

These leaders integrate AI into both cost-side and revenue-generating activities.

For example, AI-driven personalization in e-commerce can increase conversion rates, while predictive maintenance AI in manufacturing prevents costly disruptions.

Whether through more efficient operations or smarter revenue generation strategies, well-deployed AI tends to improve an organization’s financial performance.

Less talked about but equally important is how AI can improve the employee experience. A common fear is that AI will make work more impersonal or stressful, but studies often show the opposite is true when AI is used thoughtfully.

For one, AI tools can reduce menial work that leads to burnout.

In one survey, as many as 86% of American workers said they would take a pay cut if AI could help them get all of their work done and allow them to work less.

When an algorithm handles scheduling, data entry, or other basic tasks, employees are left with more meaningful tasks.

According to one report, 85% of workers said AI helps them focus on their most important work.

In addition, AI in HR can personalize the work experience.

It can provide employee support, like chatbots that answer HR questions instantly or wellness apps that provide personalized coaching.

All these applications make employees feel more supported and empowered in their jobs.

AI can be a powerful engine for innovation and creativity within an organization. It is used to generate ideas, quickly prototype solutions, or even discover new products and services.

It’s no wonder that business leaders are turning to AI to find their competitive edge.

As many as 46% of executives say differentiating their products and services is among the top objectives driving their investments in AI.

Essentially, companies are betting that AI will help them do something new or better that sets them apart in the market.

AI doesn’t replace human creativity, but it can augment the R&D process by providing fresh insights and handling the work that often hinders innovation.

Adopting AI isn’t completely risk-free, and genuine concerns about its impact on jobs, skills, security, and ethics exist. HR professionals and business leaders should weigh these cons carefully.

As AI and automation become more capable, it’s natural for employees to worry: Will my role be next?

For many employees, this can create anxiety about job security and the future of their work. While companies increasingly invest in AI, they must be aware of its risks to morale and long-term workforce trust.

Surveys consistently show high levels of worry about this issue, with over half of employed people (52%) concerned that AI could replace their jobs.

These fears are not unfounded.

Some companies are already using AI to automate customer support or basic accounting, and experts predict more disruption to come.

A major study by the World Economic Forum projected that between 2025 and 2030, structural changes in the job market, counting both roles created and roles displaced, will affect about 22% of all current jobs.

In other words, AI will both eliminate and create jobs. However, the transition can be painful for those whose roles are disrupted.

While the addition of AI in the workplace will lead to new roles, workers will need time and support to build the right skills.

Within the next five years, an estimated 70% of the skills used in today’s jobs will have changed, with AI being the primary driver of this shift.

Yet, according to a 2024 Randstad survey, companies adopting AI have been lagging in training or upskilling employees on how to use AI in their jobs.

In practice, this means an organization might roll out a new AI platform, but employees either don’t yet understand it, don’t trust it, or don’t know how to integrate it into their work.

Despite this urgency, access to AI training remains uneven. Some of the most pressing gaps show up across age, gender, and education.

Currently, 71% of AI-skilled workers are men, and only 29% are women.

To make matters worse, jobs typically held by women are far more vulnerable to AI disruption than those held by men. According to a recent UN report, AI in the workplace is nearly three times more likely to replace a woman’s job than a man’s.

Furthermore, only one in five Baby Boomers has been offered AI training opportunities, compared to nearly half of Gen Z. And while job growth favors those with college degrees, roles for workers without one continue to shrink.

As Google DeepMind CEO Demis Hassabis recently stated, “Even teens who aren’t learning AI tools now may already be falling behind.”

Without deliberate investment in reskilling and learning programs, organizations risk leaving large segments of their workforce behind and inadvertently limiting the adoption and full potential of AI.

AI runs on data, much of it sensitive and all of it in massive amounts. That reliance opens the door to serious security risks: leaks, breaches, and misuse.

Employees might unknowingly feed confidential info into generative tools that store or train on that data, with real-world consequences such as leaked source code or exposed IP.

This isn’t just theoretical: tech giant Samsung banned employees from using ChatGPT after staff uploaded sensitive source code to the tool.

In fact, by late 2023, 75% of businesses were implementing or considering bans on ChatGPT and similar AI tools on work devices precisely because of concerns about data security.

However, the responsibility goes both ways, and companies feeding employee data into AI tools must do so in compliance with privacy laws and regulations.

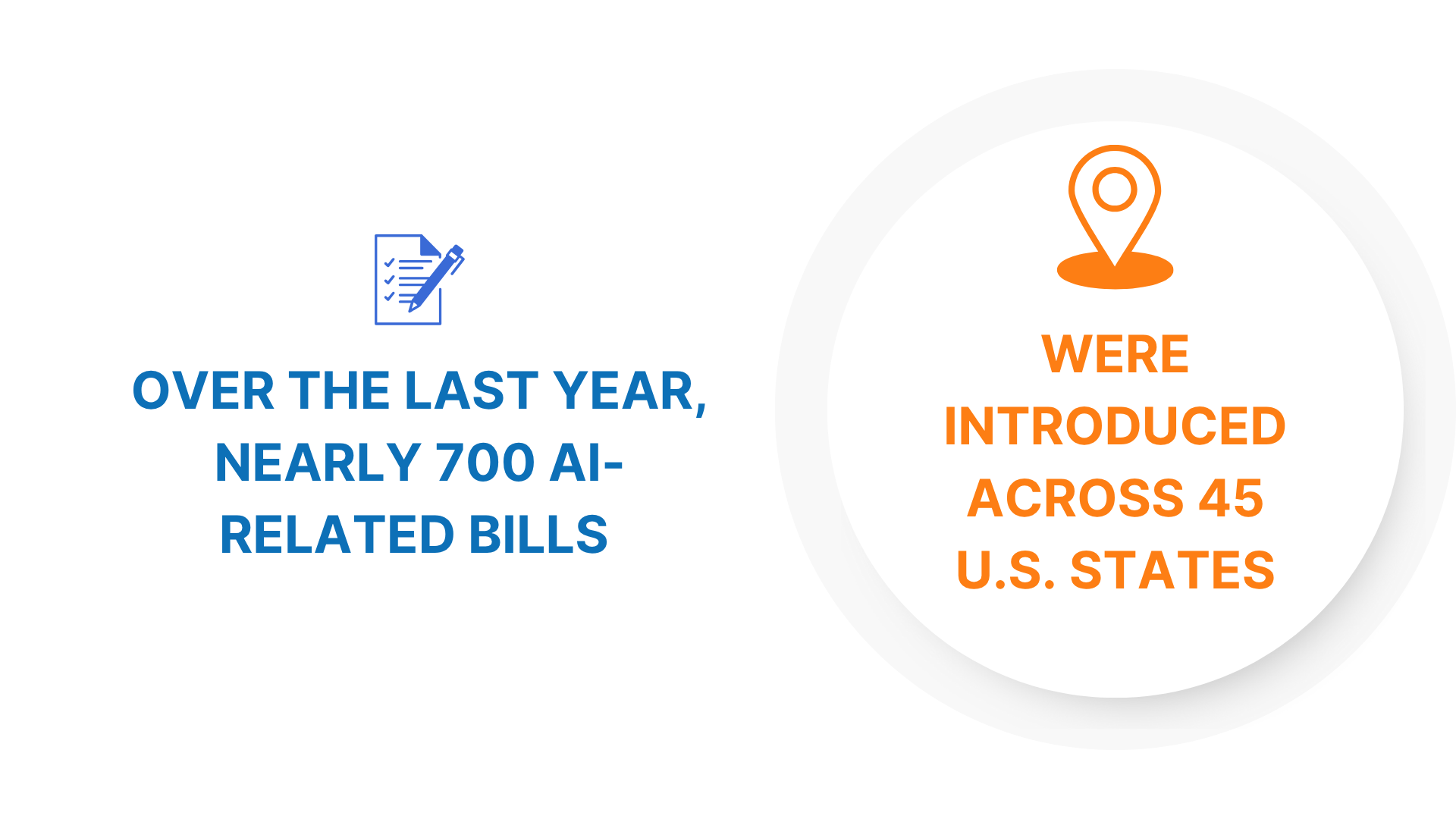

Many states are starting to address these concerns by introducing AI regulations. Over the last year, nearly 700 AI-related bills were introduced across 45 U.S. states, with nearly 20% being enacted into law.

For example, a new law in New York City requires employers to conduct annual third-party “bias audits” for any AI-driven hiring tools they use, in part to ensure fairness and transparency.
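To make the idea of a bias audit more concrete, here is a minimal sketch of one check such an audit might include: comparing selection rates across groups and flagging any group whose rate falls well below that of the most-selected group. This is an illustration only, not the methodology the New York City law prescribes. The function name `impact_ratios`, the group labels, the sample counts, and the 0.8 cutoff (the "four-fifths" rule of thumb) are all assumptions made for the example.

```python
from collections import Counter


def impact_ratios(outcomes):
    """Return each group's selection rate divided by the highest group's rate.

    `outcomes` is an iterable of (group, was_selected) pairs. The name and
    input shape are hypothetical, chosen only for this illustration.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1

    # Selection rate = selected candidates / all candidates in the group.
    rates = {group: selected[group] / totals[group] for group in totals}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selected candidates")

    # Impact ratio = group's rate relative to the most-selected group.
    return {group: rate / best for group, rate in rates.items()}


# Made-up screening results: group A advances 40 of 100, group B 20 of 100.
sample = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 20 + [("B", False)] * 80
)

ratios = impact_ratios(sample)

# Assumed cutoff: flag any group whose ratio falls below 0.8.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(ratios, flagged)
```

With this made-up data, group B advances at half the rate of group A, so it would be flagged for closer review. A real audit would involve an independent auditor, legally defined categories, and far more context than a single ratio.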

Integrating AI in the workplace means navigating these complexities – balancing innovation with compliance, protecting sensitive data, and building systems that employees and regulators alike can trust.

As AI’s use becomes more prevalent, there’s a risk that employees might start to “outsource” their thinking to their phone and AI tools or use them as a crutch for every decision.

What’s wrong with that, you might ask – isn’t the whole point to rely on the AI’s superior abilities?

The issue is that AI is not infallible.

AI hallucinations are still quite common, and models can make errors or generate biased results based on the data they were trained on.

In a Slack Workforce study, executives listed AI reliability and accuracy among their top worries in adopting the technology.

Then, there’s also a human capital concern: as workers lean on AI for everyday tasks and answers, they risk losing some of their own skills over time.

Early research is raising red flags about this cognitive offloading.

In one study, participants who heavily relied on AI tools showed a diminished ability to evaluate information and solve problems on their own.

The key is finding the right balance.

AI should be a tool that augments human capability, not a replacement for human judgment.

Last but certainly not least, AI in the workplace raises ethical questions that organizations must address.

These range from issues of bias in datasets to the use of black-box algorithms and even the moral implications of delegating certain decisions to machines.

AI systems learn from data, and if that data reflects historical biases, the AI can perpetuate or even amplify those biases in unexpected ways.

A few years ago, Amazon discovered its experimental AI hiring tool was systematically downgrading CVs that included indicators of being female, because it had trained on data from the male-dominated tech industry.

More recently, TikTok videos of AI “recruiters” conducting job interviews went viral, showing the bots glitching or shutting down mid-conversation.

While the clips made for entertaining content, they also sparked a debate about the bigger issue of machines making decisions that affect people’s lives and the dehumanization of hiring.

Ignoring the ethical dimension is risky from a legal perspective and goes against the principles of building a workplace where trust and fairness are valued.

Given the pros and cons of AI in the workplace outlined above, how can organizations adopt AI in a way that maximizes the benefits while minimizing risks?

In this section, we go over the three critical components of getting AI deployment right: strategic planning, establishing policies, and investing in training and support.

Nearly half (49%) of technology leaders in a 2024 PwC survey said AI was already “fully integrated” into their company’s core business strategy.

While the idea of driving value from AI is exciting, companies must use it strategically. Instead of using AI just because everyone else is, tie your efforts to specific business goals and pain points.

Ask yourself and your leadership team:

Once you’ve identified a few high-impact, achievable use cases, start there.

During planning, the end-users of AI systems should be involved in the conversation.

If you’re implementing AI for sales forecasting, get input from the sales managers about their needs and concerns. Bring in cross-functional stakeholders, like HR, IT, and managers, to get a full view of technical needs, employee impact, and workflow integration.

Next, assess whether you’re truly ready.

Running an internal AI readiness audit can clarify your next steps.

As one McKinsey report summarized, organizations that redesign workflows and put proper structures in place for AI deployment are the ones seeing bottom-line impact.

As mentioned already in the cons section, the use of AI exposes companies to a host of potential issues.

And yet, about 43% of desk workers in a recent survey said they had received no guidance on how to use AI tools at work, which can lead to misuse or security lapses.

While having formal AI policies is not yet universal, it is increasingly seen as a best practice.

What might an AI policy include?

First, it should set out guiding principles, like a commitment to never trade fairness, transparency, or data privacy for efficiency. Larger organizations may go further and set up an AI or data ethics committee to review high-impact deployments and keep accountability in check.

The policy can define approval processes for new AI tools, such as requiring vetting by IT and legal, especially if they involve sensitive data or critical decisions.

It’s also wise to include data management rules, such as who owns the data used by AI, how long it is kept, and how consent is obtained if personal data is involved.

These guidelines protect against the kind of missteps that lead to breaches or ethical lapses.

Finally, even the best AI strategy and the most thorough policies will be meaningless if your people aren’t equipped to use the new tools.

For this reason, companies must create a growth-oriented culture that focuses on developing people alongside technology.

They can start by assessing the training needs: different groups may need different levels of education. Use tutorials, workshops, or your LMS to deliver targeted training modules.

Front-line employees might need hands-on instruction and practice with the new tools. Managers and leaders, on the other hand, may need training on how to integrate AI insights into their decision processes, or how to communicate about AI changes to their teams.

Don’t assume tech familiarity, as even younger, digitally savvy employees might not intuitively know how to get the best out of a specific AI application.

Lastly, bear in mind that soft skills become more important, not less, in an AI-powered workplace. Skills that AI can’t replace, like creativity, empathy, and ethical reasoning, deserve increased emphasis in professional development programs.

In conclusion, the pros and cons of AI in the workplace reveal that while AI can drive remarkable productivity, insight, and innovation, it also requires careful handling of challenges around jobs, skills, security, and ethics.

The call to action for HR leaders and business decision-makers is clear: act now to harness AI’s potential, but do so thoughtfully and inclusively.

The future isn’t about AI replacing humans, but about building environments where people and machines collaborate to achieve more than either could alone.

Senior Content Writer at Shortlister
