Presenters:

Maya D’Eon

Head of Clinical

Ricardo Rei

Head of AI Research

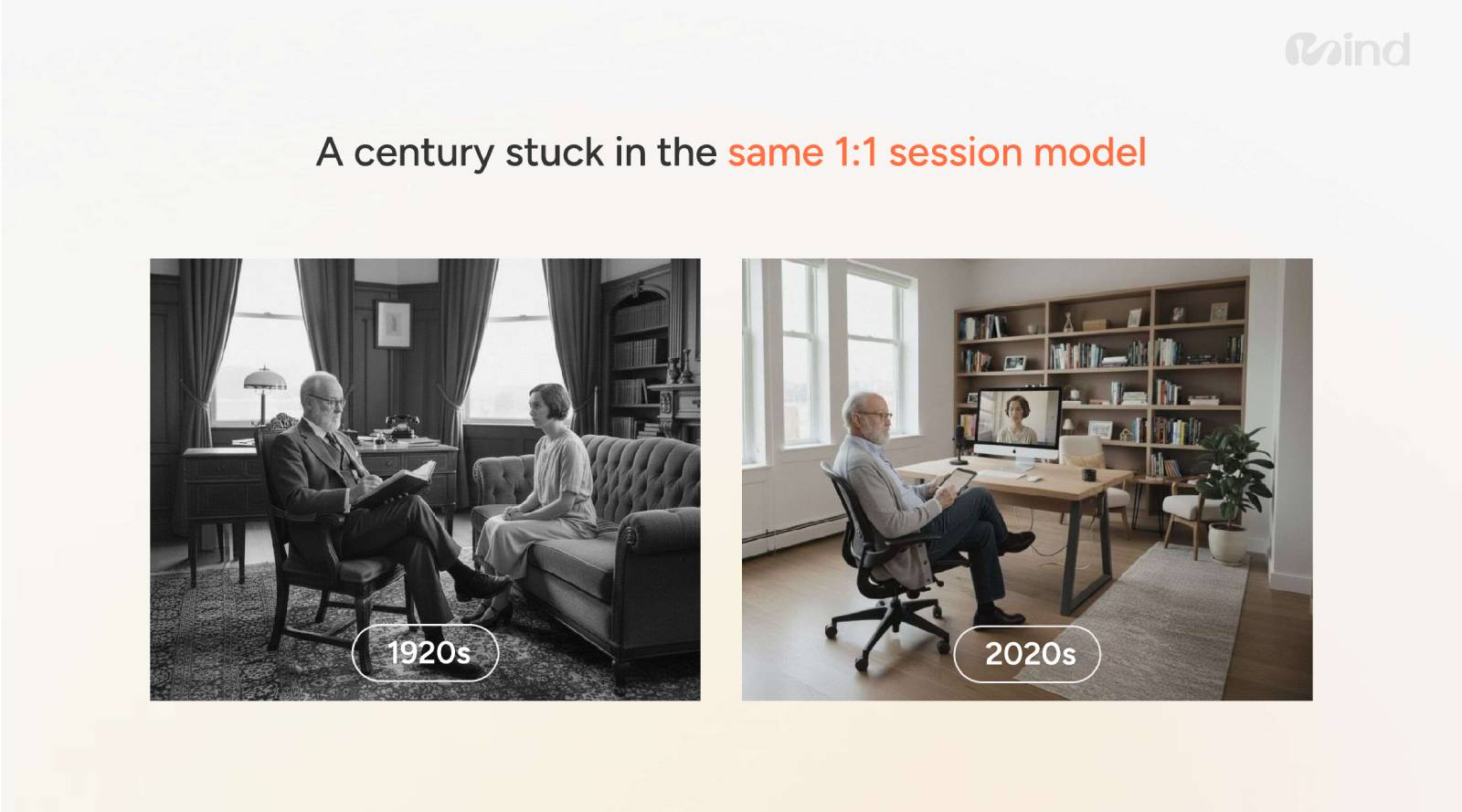

Mental health has become one of the defining health challenges of modern times and of the newest generations. Despite increased awareness, investment, and innovation, the dominant model of care has barely evolved in over a century. One-on-one therapy sessions, introduced in the 1920s, remain the primary form of support. Because each session runs about 45 minutes and a human clinician cannot deliver care to multiple people simultaneously, a clinician might treat only six to eight people in a day.

Demand outpaces supply, and long waitlists, high costs, and limited access continue to leave millions without timely care. At the same time, artificial intelligence is rapidly entering the mental health landscape. People are already using tools like Claude and ChatGPT, which were built for information seeking rather than for mental health, as a source of psychotherapy and counseling. More importantly, ChatGPT, arguably the most used generative AI platform, has reported that 1 million users every week show some suicidal intent.

As highlighted in our recent webinar, Maya D’Eon, Head of Clinical at Sword Health, and Ricardo Rei, Head of AI Research at Sword Health, showed us that building AI for mental health requires a fundamentally different approach than building general-purpose conversational models. AI-powered tools promise scalability, affordability, and round-the-clock availability: support that can reach people where and when traditional care cannot. But with this promise comes significant risk.

About Mind by Sword Health

Mind by Sword Health is a daily mental fitness program that’s built for action, to help people overcome mental blocks. Mind combines clinical expertise, wearable technology, and the power of Phoenix AI.

Sword Health delivers the program with state-of-the-art technology, industry-leading service models, and a clinical foundation that no one else can match.

The Promise and Peril of AI Mental Health Support

AI is already being used by millions for emotional support, advice, and companionship. Large language models (LLMs) are frequently engaged in conversations that resemble psychotherapy or counseling, sometimes involving highly sensitive and vulnerable disclosures. This reality underscores both the opportunity and the responsibility of AI developers.

The promise of AI mental health support is clear:

- Scalability – AI can serve large populations simultaneously.

- Accessibility – Support is available anytime, without waitlists.

- Affordability – Many tools offer low or no-cost access.

- Convenience – Users can engage discreetly through familiar digital interfaces.

However, most existing AI systems were not designed specifically for mental health. When repurposed for emotional support, they can exhibit dangerous limitations.

- Overly Reassuring Responses. Many models prioritize likeability, validating every feeling without challenging unhealthy patterns or recognizing risk. If you spend a lot of time with these LLMs, you might notice that they often say “yes, you’re right” or “that’s great,” as if trying to make you feel good in the moment. That can be quite counter-therapeutic in a mental health support context, where likeability alone is not enough: you want strong rapport, but you also want to build insight.

- Performative Therapy. AI may “sound” therapeutic, using empathetic language, without applying principled clinical reasoning underneath.

- Lack of Context. Generic models often operate in isolation, without integrating personal history, psychosocial factors, or evolving risk signals.

- Reactive Behavior. Traditional AI responds only when prompted, failing to notice patterns, initiate check-ins, or suggest timely interventions.

In mental health contexts, these shortcomings are not merely technical; they can be harmful.

Rethinking How Mental Health AI Is Built

Developing AI for mental health requires an entirely different approach. General benchmarks used in AI development, such as math accuracy or coding performance, are insufficient for evaluating safety, nuance, and clinical appropriateness. Instead, mental health AI must be:

- Action-guiding, not just conversational

- Grounded in real-life context

- Evidence-led

- Non-judgmental and bias-aware

- Boundaried and safe

This shift begins with how data is created, evaluated, and validated.

Purpose-Built Data and Clinical Validation

Unlike general models that rely heavily on scraped or synthetic data, mental health AI must be trained with intentional design and clinical oversight. The approach presented in the webinar emphasizes user simulation with clinical input. The process includes:

- Setting the Stage. Simulated users are created with specific mental health contexts and journeys, alongside clear instructions for how the model should respond.

- Generating Conversations. The model engages in realistic dialogues reflecting emotional complexity and ambiguity.

- Clinical Validation. Clinical experts directly edit, refine, or regenerate responses to ensure safety, appropriateness, and therapeutic integrity before the data is used for training.

Human validation is essential. Without it, models risk converging on shallow “therapy-speak” that lacks depth, consistency, and safety.
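The three steps above can be sketched in code. This is only an illustrative outline, not the pipeline presented in the webinar: the names `SimulatedUser`, `Turn`, `generate_conversation`, `clinically_validate`, and `training_examples` are hypothetical, and the user simulator, the model, and the clinician review step are passed in as plain callables. The one idea it tries to capture is that nothing reaches the training set unless a clinician has approved it.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class SimulatedUser:
    """Hypothetical persona that sets the stage for a conversation."""
    context: str       # mental health context, e.g. presenting concern
    journey: str       # where the user is in their journey
    instructions: str  # how the model is expected to respond


@dataclass
class Turn:
    user_message: str
    model_response: str
    approved: bool = False  # only the clinical review step sets this


def generate_conversation(
    user: SimulatedUser,
    user_sim: Callable[[SimulatedUser, List[Turn]], str],
    model: Callable[[SimulatedUser, List[Turn], str], str],
    n_turns: int,
) -> List[Turn]:
    """Alternate simulated-user messages and model responses."""
    turns: List[Turn] = []
    for _ in range(n_turns):
        message = user_sim(user, turns)
        response = model(user, turns, message)
        turns.append(Turn(message, response))
    return turns


def clinically_validate(
    turns: List[Turn], review: Callable[[Turn], str]
) -> List[Turn]:
    """`review` stands in for a clinician who returns the final response
    (unchanged, edited, or regenerated) for each turn."""
    return [Turn(t.user_message, review(t), approved=True) for t in turns]


def training_examples(turns: List[Turn]) -> List[Tuple[str, str]]:
    """Refuse to emit training pairs for any unreviewed turn."""
    if any(not t.approved for t in turns):
        raise ValueError("unvalidated turns cannot be used for training")
    return [(t.user_message, t.model_response) for t in turns]
```

Making the approval flag a hard precondition of `training_examples` is one way to encode "human validation is essential" so that skipping review fails loudly rather than silently.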

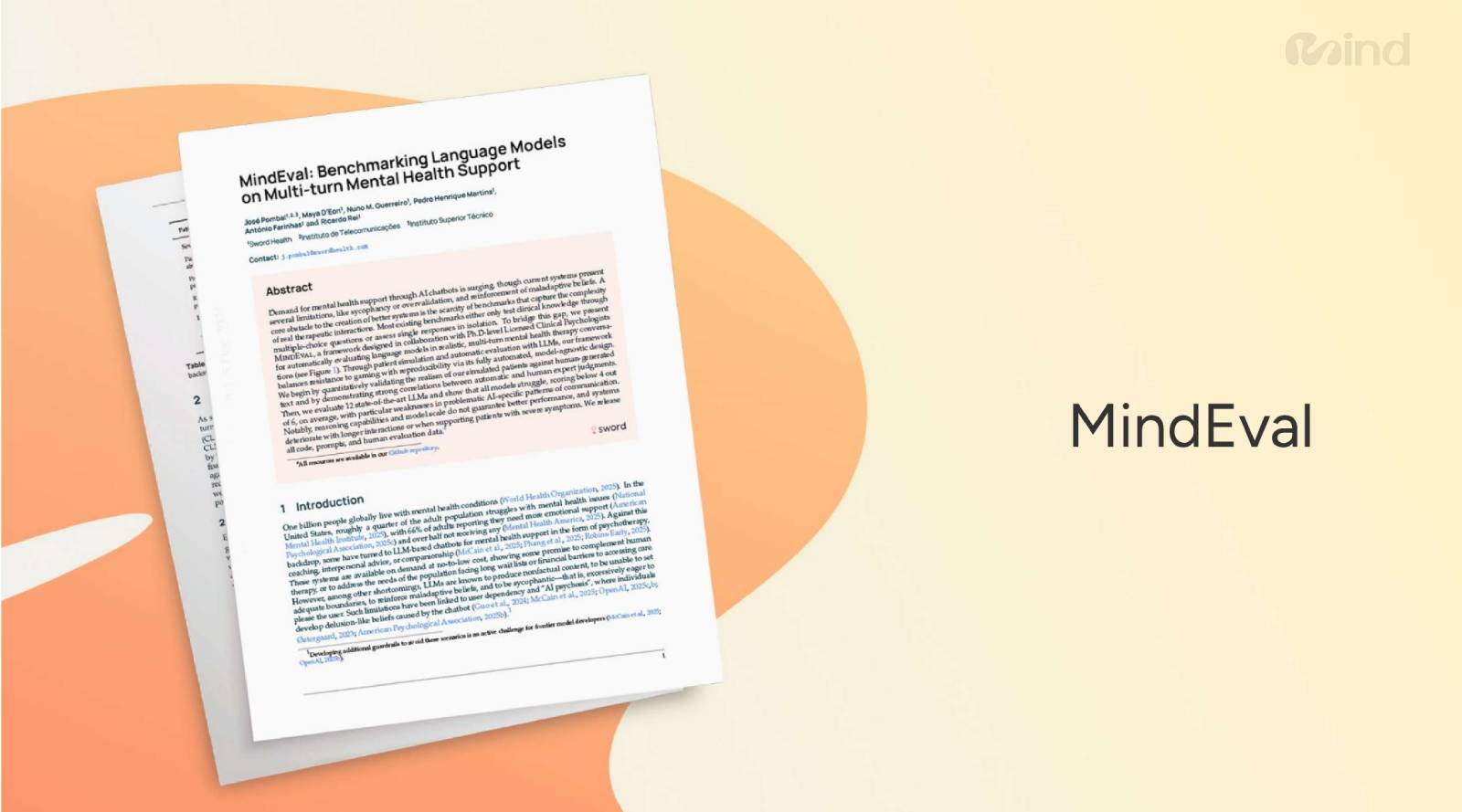

Introducing MindEval: Measuring What Actually Matters

One of the biggest challenges in mental health AI is evaluation. There is no widely accepted benchmark for automated mental health support quality or safety. To address this gap, the team introduced MindEval, a purpose-built evaluation framework designed specifically for mental health conversations. MindEval assesses:

- Clinical appropriateness

- Risk sensitivity

- Longitudinal consistency

- Response quality across nuanced, real-world scenarios

This goes far beyond simple content moderation. It reflects how clinicians assess conversations by tracking signals over time rather than reacting to isolated statements.

Dynamic Risk Profiles and Mental Health Guardrails

A key insight from the webinar is that mental health risk is dynamic. Signals of distress or self-harm rarely appear as a single explicit statement. Instead, they emerge gradually through tone, phrasing, withdrawal, or subtle expressions of hopelessness. Generic AI safety guardrails struggle here because they are often:

- Response-specific rather than longitudinal

- Overloaded with broad safety rules

- Insensitive to nuance and context

The model addresses this by building a dynamic risk profile for each user. This profile:

- Captures risk factors, warning signs, and protective factors

- Accumulates insights across multiple interactions

- Updates continuously as new information emerges

- Informs more accurate, context-aware responses over time

This mirrors how clinicians think, considering patterns, changes, and trajectories rather than single moments.
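A minimal sketch of what such a profile could look like as a data structure follows. It is an assumption-laden illustration, not Sword Health's implementation: the class names `Observation` and `RiskProfile` are hypothetical, signal extraction from a conversation is out of scope, and `is_escalating` is a toy heuristic included only to show the difference between longitudinal and response-specific checks. It is not a clinical rule.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Observation:
    """Signals noted in a single interaction (how they are extracted
    from the conversation is not modeled here)."""
    risk_factors: Set[str] = field(default_factory=set)
    warning_signs: Set[str] = field(default_factory=set)
    protective_factors: Set[str] = field(default_factory=set)


@dataclass
class RiskProfile:
    """Accumulates signals across interactions instead of judging
    each message in isolation."""
    risk_factors: Set[str] = field(default_factory=set)
    warning_signs: Set[str] = field(default_factory=set)
    protective_factors: Set[str] = field(default_factory=set)
    history: List[Observation] = field(default_factory=list)

    def update(self, obs: Observation) -> None:
        """Merge a new interaction's signals into the running profile."""
        self.risk_factors |= obs.risk_factors
        self.warning_signs |= obs.warning_signs
        self.protective_factors |= obs.protective_factors
        self.history.append(obs)

    def warning_sign_trend(self, window: int = 3) -> List[int]:
        """Warning-sign count per interaction over the last `window`."""
        return [len(o.warning_signs) for o in self.history[-window:]]

    def is_escalating(self, window: int = 3) -> bool:
        """Toy heuristic (an assumption): counts strictly increase
        across a full window of recent interactions."""
        trend = self.warning_sign_trend(window)
        return len(trend) == window and all(
            a < b for a, b in zip(trend, trend[1:])
        )
```

For example, three interactions with zero, one, and two warning signs would each look unremarkable to a per-message filter, while the profile's trend `[0, 1, 2]` surfaces the trajectory.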

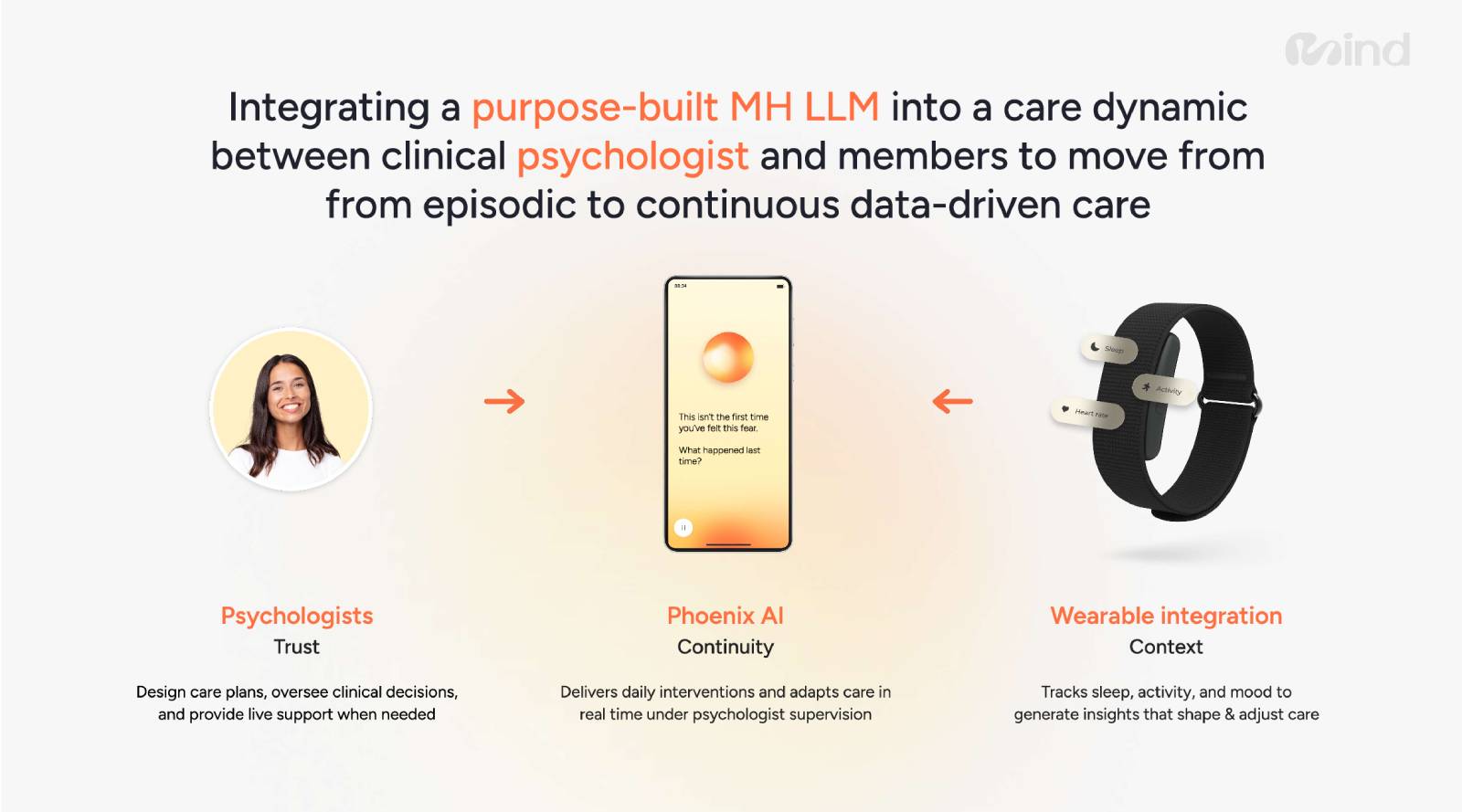

Integrating AI Into a Broader Care Model

Importantly, this approach does not position AI as a replacement for clinicians. Instead, it integrates AI into a care dynamic that includes psychologists, wearable data, and ongoing human oversight. In this model:

- Wearables provide contextual signals like sleep, activity, and mood.

- Psychologists design care plans, oversee decisions, and step in when live support is needed.

- AI systems deliver daily interventions and adapt support in real time, under clinical supervision.

The result is a shift from episodic care to continuous, data-driven mental health support.

Finally, success is measured not by engagement metrics or conversation length, but by clinical outcomes. Progress is tracked against each individual’s baseline using validated tools such as PHQ-9 and GAD-7 scores. Meaningful improvement and recovery become the true benchmark of effectiveness.
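Tracking against a baseline can be illustrated with a small calculation. The sketch below relies only on the instruments' total-score ranges (PHQ-9: 0 to 27, GAD-7: 0 to 21, lower meaning fewer symptoms). The function name `change_from_baseline` is hypothetical, and `min_points`, the size of drop counted as meaningful, is deliberately left as a caller-supplied assumption because the source does not state which threshold is used.

```python
from typing import Dict, Union

# Maximum total scores; both instruments start at 0.
MAX_SCORE = {"PHQ-9": 27, "GAD-7": 21}


def change_from_baseline(
    instrument: str, baseline: int, current: int, min_points: int
) -> Dict[str, Union[str, int, bool]]:
    """Compare a current score with the individual's own baseline.

    `min_points` is an assumption supplied by the caller: the drop in
    score treated as meaningful improvement for this instrument.
    """
    top = MAX_SCORE[instrument]  # KeyError for unknown instruments
    for name, score in (("baseline", baseline), ("current", current)):
        if not 0 <= score <= top:
            raise ValueError(f"{name} {instrument} score must be 0..{top}")
    improved_by = baseline - current  # positive means fewer symptoms
    return {
        "instrument": instrument,
        "improved_by": improved_by,
        "meaningful": improved_by >= min_points,
    }
```

Called as `change_from_baseline("PHQ-9", 16, 9, min_points=5)`, this reports a 7-point drop and flags it as meaningful under that illustrative threshold.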

Get in touch with the Sword Health team today!

As AI becomes more embedded in mental health solutions offered to employees and members, safety, rigor, and accountability are non-negotiable. Tools that merely sound supportive are not enough. Mental health AI must be built with purpose, evaluated with clinical insight, and deployed within responsible care frameworks.

The future of mental health support depends not just on what AI can say, but on how thoughtfully it is designed to listen, assess, and act.

For more information, contact:

David Zhang – d.zhang@swordhealth.com

